Paper

Available on the ACL Anthology.

Citation

Mustafa Sercan Amac, Semih Yagcioglu, Aykut Erdem, and Erkut Erdem. "Procedural Reasoning Networks for Understanding Multimodal Procedures". In CoNLL 2019.


Abstract

In this work, we address the problem of comprehending procedural commonsense knowledge. This is a challenging task as it requires identifying key entities, keeping track of their state changes, and understanding temporal and causal relations. Contrary to most previous work, we do not rely on strong inductive bias and instead explore how multimodality can be exploited to provide a complementary semantic signal. Towards this end, we introduce a new entity-aware neural comprehension model augmented with external relational memory units. Our model learns to dynamically update entity states in relation to each other while reading the text instructions. Our experimental analysis on the visual reasoning tasks in the recently proposed RecipeQA dataset reveals that our approach improves the accuracy of the previously reported models by a large margin. Moreover, we find that our model learns effective dynamic representations of entities even though we do not use any supervision at the level of entity states.

Introduction

A great deal of commonsense knowledge about the world we live in is procedural in nature and involves steps that show ways to achieve specific goals. Understanding and reasoning about procedural texts (e.g. cooking recipes, how-to guides, scientific processes) is very hard for machines, as it demands modeling the intrinsic dynamics of the procedures. That is, one must be aware of the entities present in the text, infer relations among them, and even anticipate changes in the states of the entities after each action.

In recent years, tracking entities and their state changes has been explored in the literature from a variety of perspectives. In an early work, Henaff et al. (2017) [1] proposed a dynamic memory based network which updates entity states using a gating mechanism while reading the text. Bansal et al. (2017) [2] presented a more structured memory augmented model which employs memory slots for representing both entities and their relations. Pavez et al. (2018) [3] suggested a conceptually similar model in which the pairwise relations between attended memories are utilized to encode the world state.

Perez and Liu (2017) [4] showed that similar ideas can be used to compile supporting memories in tracking dialogue state. Wang et al. (2017) [5] showed the importance of coreference signals for the reading comprehension task. More recently, Dhingra et al. (2018) [6] introduced a specialized recurrent layer which uses coreference annotations to improve reading comprehension. Ji et al. (2017) [7] proposed a language model which explicitly incorporates entities while dynamically updating their representations, and applied it to a variety of tasks such as language modeling, coreference resolution, and entity prediction.

Our work builds upon and contributes to the growing literature on tracking state changes in procedural text. Bosselut et al. (2018) [8] presented a neural model that can learn to explicitly predict state changes of ingredients at different points in a cooking recipe. Dalvi et al. (2018) [9] proposed another entity-aware model to track entity states in scientific processes. Tandon et al. (2018) [10] demonstrated that the prediction quality can be boosted by including hard and soft constraints to eliminate unlikely or favor probable state changes. In a follow-up work, Du et al. (2019) [11] exploited the notion of label consistency in training to enforce similar predictions in similar procedural contexts. Das et al. (2019) [12] proposed a model that dynamically constructs a knowledge graph while reading the procedural text to track the ever-changing entity states.

To mitigate the aforementioned challenges, existing works rely mostly on heavy supervision and focus on predicting the individual state changes of entities at each step. Although these models can accurately learn to make local predictions, they may lack global consistency [10, 11], not to mention that building such annotated corpora is very labor-intensive. As discussed earlier, these previous methods use a strong inductive bias and assume that state labels are present during training. In our study, we deliberately focus on unlabeled procedural data and ask the question: can multimodality help to identify state changes and provide insights into understanding them? Hence, we take a different direction by exploring the problem from a multimodal standpoint.

Procedural Reasoning Networks (PRN)

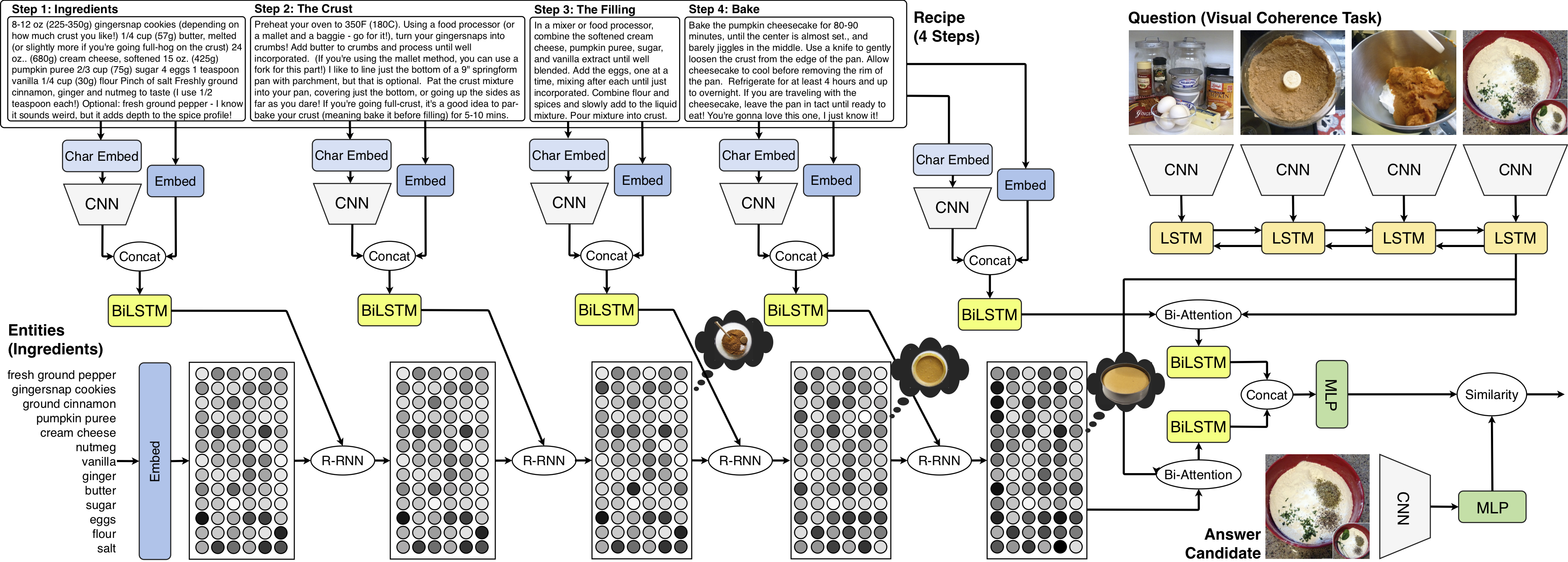

We follow a simple framework and employ the PRN model for comprehending procedural text. In that regard, we use three visual tasks from the RecipeQA dataset, namely the visual cloze, visual coherence, and visual ordering tasks. The goal of these tasks is to provide the correct answer for each question. For instance, as described in Figure 2, our model reads a recipe step by step and, while reading it, internally updates the entity states based on the new information. Finally, the model decides which answer is correct based on the question asked.

Our model consists of five main modules: An input module, an attention module, a reasoning module, a modeling module, and an output module. Note that the question answering tasks we consider here are multimodal in that while the context is a procedural text, the question and the multiple choice answers are composed of images.

Each module is described below.

- Input Module extracts vector representations of inputs at different levels of granularity by using several different encoders.

- Reasoning Module scans the procedural text and tracks the states of the entities and their relations through a recurrent relational memory core unit [13].

- Attention Module computes context-aware query vectors and query-aware context vectors as well as query-aware memory vectors.

- Modeling Module employs two multi-layered RNNs to encode the outputs of the previous layers.

- Output Module scores a candidate answer from the given multiple-choice list.
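The way the modules interact can be illustrated with a toy sketch. This is not the authors' implementation: it swaps the relational memory core [13] for a plain sigmoid gate, uses hand-written 2-D vectors in place of learned encoders, and all names (`update_entities`, `score_answers`) are hypothetical. It only shows the control flow suggested by the list above: read the steps one at a time, update every entity state, then score each candidate answer against a question-conditioned summary of the memory.

```python
import math


def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def update_entities(entity_states, step_vec):
    """Move each entity state toward the step vector, gated by relevance.

    Assumption: a simple sigmoid gate stands in for the relational
    memory core used in the actual model.
    """
    updated = []
    for state in entity_states:
        gate = 1.0 / (1.0 + math.exp(-dot(state, step_vec)))
        updated.append(
            [(1.0 - gate) * s + gate * x for s, x in zip(state, step_vec)]
        )
    return updated


def score_answers(entity_states, question_vec, candidates):
    """Score candidates against a question-aware summary of the memory."""
    weights = softmax([dot(e, question_vec) for e in entity_states])
    dim = len(question_vec)
    summary = [
        sum(w * e[i] for w, e in zip(weights, entity_states))
        for i in range(dim)
    ]
    return [dot(summary, c) for c in candidates]


# Toy data (hypothetical): two entities, two recipe steps, two candidates.
entities = [[1.0, 0.0], [0.0, 1.0]]
steps = [[1.0, 0.0], [1.0, 0.0]]
for step in steps:
    entities = update_entities(entities, step)

question = [1.0, 0.0]
candidates = [[1.0, 0.0], [0.0, 1.0]]
scores = score_answers(entities, question, candidates)
predicted = max(range(len(scores)), key=scores.__getitem__)
print("predicted answer index:", predicted)
```

In this toy setting both steps point along the first axis, so the second entity drifts toward it and the first candidate receives the higher score.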

Results

| Model | Cloze (single-task) | Coherence (single-task) | Ordering (single-task) | Average (single-task) | Cloze (multi-task) | Coherence (multi-task) | Ordering (multi-task) | All (multi-task) |
|---|---|---|---|---|---|---|---|---|
| Human* | 77.60 | 81.60 | 64.00 | 74.40 | – | – | – | – |
| Hasty Student | 27.35 | 65.80 | 40.88 | 44.68 | – | – | – | – |
| Impatient Reader | 27.36 | 28.08 | 26.74 | 27.39 | – | – | – | – |
| BiDAF | 53.95 | 48.82 | 62.42 | 55.06 | 44.62 | 36.00 | 63.93 | 48.67 |
| BiDAF w/ static memory | 51.82 | 45.88 | 60.90 | 52.87 | 47.81 | 40.23 | 62.94 | 50.59 |
| PRN | 56.31 | 53.64 | 62.77 | 57.57 | 46.45 | 40.58 | 62.67 | 50.17 |

*Taken from the RecipeQA project website, based on 100 questions sampled randomly from the validation set.
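As a quick sanity check on the table, the single-task "Average" column can be reproduced as the unweighted mean of the three task accuracies. The snippet below is only an arithmetic illustration; the dictionary layout and the `mean_accuracy` helper are assumptions made for this example. The multi-task "All" column is left out because it does not match the same unweighted mean (for BiDAF the mean of the three multi-task columns would be 48.18, not 48.67), so it is presumably computed differently.

```python
# Single-task results copied from the table: (cloze, coherence, ordering, reported average).
single_task = {
    "Human": (77.60, 81.60, 64.00, 74.40),
    "Hasty Student": (27.35, 65.80, 40.88, 44.68),
    "Impatient Reader": (27.36, 28.08, 26.74, 27.39),
    "BiDAF": (53.95, 48.82, 62.42, 55.06),
    "BiDAF w/ static memory": (51.82, 45.88, 60.90, 52.87),
    "PRN": (56.31, 53.64, 62.77, 57.57),
}


def mean_accuracy(task_scores):
    """Unweighted mean of per-task accuracies, rounded to two decimals."""
    return round(sum(task_scores) / len(task_scores), 2)


for model, (cloze, coherence, ordering, reported) in single_task.items():
    computed = mean_accuracy((cloze, coherence, ordering))
    print(f"{model}: computed {computed:.2f}, reported {reported:.2f}")
```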

Interactive Demo

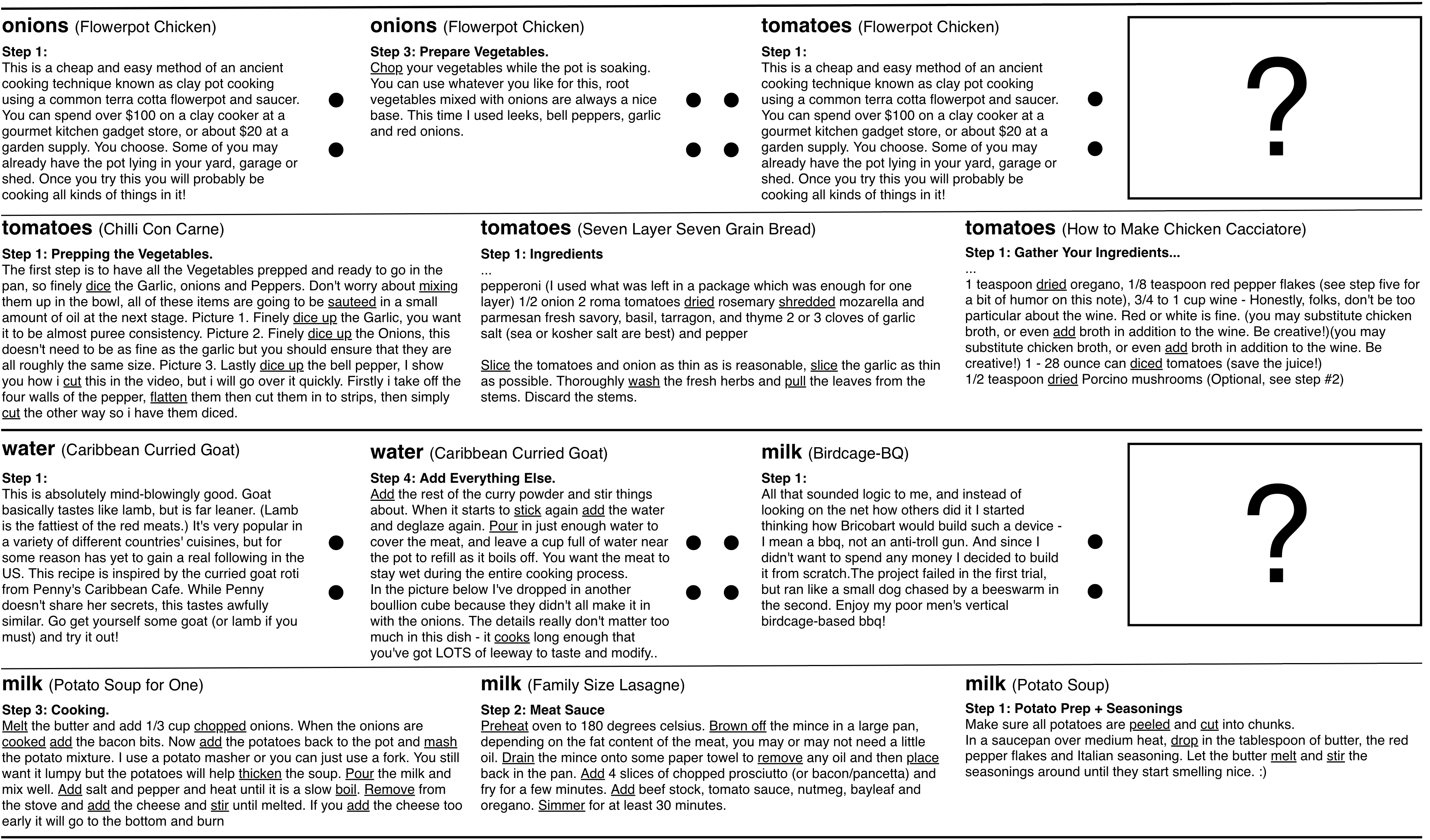

Visual Reasoning Tasks in RecipeQA

Entity Embedding Arithmetics

Step-aware entity representations can be used to discover the changes that occurred in the states of the ingredients between two different recipe steps. The difference vector between two entities can then be added to other entities to find their next states.

(Interactive table pairing entity embeddings with step descriptions; not reproduced here.)
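The arithmetic described above can be sketched with toy vectors. The embeddings below are made up for illustration and are not taken from the trained model; the entity names, the step indices, and the use of cosine similarity for the nearest-neighbor lookup are all assumptions of this example.

```python
import math


def cosine(a, b):
    """Cosine similarity between two non-zero vectors."""
    num = sum(x * y for x, y in zip(a, b))
    den = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return num / den


# Hypothetical step-aware embeddings: the second axis stands for "melted".
embeddings = {
    "butter@step1": [1.0, 0.0, 0.0],
    "butter@step3": [1.0, 1.0, 0.0],
    "chocolate@step1": [0.0, 0.0, 1.0],
    "chocolate@step4": [0.0, 1.0, 1.0],
}

# Difference vector capturing the state change of one entity between two steps.
delta = [
    after - before
    for before, after in zip(embeddings["butter@step1"], embeddings["butter@step3"])
]

# Apply the same change to another entity and look up the closest embedding.
query = [c + d for c, d in zip(embeddings["chocolate@step1"], delta)]
nearest = max(embeddings, key=lambda name: cosine(query, embeddings[name]))
print("nearest state:", nearest)
```

With these toy values, adding the butter difference vector to the initial chocolate embedding lands exactly on the later chocolate embedding, which is the behavior the paragraph describes.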

The dynamic entity embeddings can also be explored in the TensorFlow Projector.

Conclusion

We have presented a new neural architecture called Procedural Reasoning Networks (PRN) for multimodal understanding of step-by-step instructions. Our proposed model is based on the successful BiDAF framework but is also equipped with an explicit memory unit that provides an implicit mechanism to keep track of the changes in the states of the entities over the course of the procedure. Our experimental analysis on visual reasoning tasks in the RecipeQA dataset shows that the model significantly improves the results of the previous models, indicating that it better understands the procedural text and the accompanying images. Additionally, we carefully analyze our results and find that our approach learns meaningful dynamic representations of entities without any entity-level supervision. Although we achieve state-of-the-art results on RecipeQA, there is clearly still room for improvement compared to human performance.

Acknowledgments

This work was supported by a TUBA GEBIP fellowship awarded to E. Erdem, and by the MMVC project via an Institutional Links grant (Project No. 217E054) under the Newton-Katip Çelebi Fund partnership funded by the Scientific and Technological Research Council of Turkey (TUBITAK) and the British Council. We also thank NVIDIA Corporation for the donation of GPUs used in this research.

References

1. Tracking The World State with Recurrent Entity Networks. Henaff, M., Weston, J., Szlam, A., Bordes, A. and LeCun, Y., 2017. Proceedings of the International Conference on Learning Representations (ICLR).

2. RelNet: End-to-End Modeling of Entities & Relations. Bansal, T., Neelakantan, A. and McCallum, A., 2017. NeurIPS Workshop on Automated Knowledge Base Construction (AKBC).

3. Working Memory Networks: Augmenting Memory Networks with a Relational Reasoning Module. Pavez, J., Allende, H. and Allende-Cid, H., 2018. Proceedings of the Annual Meeting of the Association for Computational Linguistics (ACL), pp. 1000-1009.

4. Dialog state tracking, a machine reading approach using Memory Network. Perez, J. and Liu, F., 2017. Proceedings of the 15th Conference of the European Chapter of the Association for Computational Linguistics: Volume 1, Long Papers, pp. 305-314.

5. Emergent Predication Structure in Hidden State Vectors of Neural Readers. Wang, H., Onishi, T., Gimpel, K. and McAllester, D., 2017. Proceedings of the 2nd Workshop on Representation Learning for NLP, pp. 26-36. Association for Computational Linguistics.

6. Neural Models for Reasoning over Multiple Mentions using Coreference. Dhingra, B., Jin, Q., Yang, Z., Cohen, W.W. and Salakhutdinov, R., 2018. Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT).

7. Dynamic Entity Representations in Neural Language Models. Ji, Y., Tan, C., Martschat, S., Choi, Y. and Smith, N.A., 2017. Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP).

8. Simulating Action Dynamics with Neural Process Networks. Bosselut, A., Ennis, C., Levy, O., Holtzman, A., Fox, D. and Choi, Y., 2018. Proceedings of the International Conference on Learning Representations (ICLR).

9. Tracking State Changes in Procedural Text: a Challenge Dataset and Models for Process Paragraph Comprehension. Dalvi, B., Huang, L., Tandon, N., Yih, W. and Clark, P., 2018. Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies.

10. Reasoning about Actions and State Changes by Injecting Commonsense Knowledge. Tandon, N., Dalvi, B., Grus, J., Yih, W., Bosselut, A. and Clark, P., 2018. Proceedings of the Conference on Empirical Methods in Natural Language Processing (EMNLP).

11. Be Consistent! Improving Procedural Text Comprehension using Label Consistency. Du, X., Mishra, B.D., Tandon, N., Bosselut, A., Yih, W., Clark, P. and Cardie, C., 2019. Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT).

12. Building Dynamic Knowledge Graphs from Text using Machine Reading Comprehension. Das, R., Munkhdalai, T., Yuan, X., Trischler, A. and McCallum, A., 2019. Proceedings of the International Conference on Learning Representations (ICLR).

13. Relational Recurrent Neural Networks. Santoro, A., Faulkner, R., Raposo, D., Rae, J., Chrzanowski, M., Weber, T., Wierstra, D., Vinyals, O., Pascanu, R. and Lillicrap, T., 2018. Proceedings of the Advances in Neural Information Processing Systems (NeurIPS), pp. 7299-7310.

We used the distill.pub project template for the website design.